On AI, ML, LLMs and the future of software

I recently left my job at Netlify and have been looking at what's next. At Netlify I became very interested in the power of ML, AI and LLMs in particular, and that's the area I've been looking in. But there's a lot of hype and buzzwords around, so I wanted an explainer I can point people to when they ask "but what is all that stuff really?" It's intended to be short, and so it simplifies a lot on purpose.

AI is (now) a marketing term

When I went to school for computer science 20 years ago, Artificial Intelligence (AI) meant a specific thing: computers that could perform symbolic reasoning about the world, the way humans do. That's now referred to as AGI, or Artificial General Intelligence. Nobody has invented AGI yet; there are still no computers that can think and have an identity the way humans do, although some people suspect we are getting close to that and will be there soon (I'm skeptical).

What there has been is a huge series of leaps forward recently in Machine Learning, or ML. Companies using this tech have tended to call themselves "AI" companies, which is true in that ML is a subset of AI. It's like if companies that made bicycles called themselves transportation companies. But what's ML?

ML is learning from examples

Machine learning involves giving a computer a huge number of examples of something and letting it learn from those examples. For instance, you can give a computer a million pictures of words and tell it what those words are, and thus train it to recognize letters and convert pictures of words into text. If you have an iPhone, that's a feature now built into your photos app: you can copy and paste text out of pictures. Another example is email spam filters: if you give a computer a million examples of emails and label them as spam or not-spam, it gets pretty good at figuring out what spam looks like. You use ML every day.
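To make "learning from examples" concrete, here's a minimal sketch of the spam-filter idea in Python. It's a toy under stated assumptions, not how any real mail provider does it: the six example emails, the "spam"/"ham" labels, and the `train` and `classify` function names are all made up for illustration, and the scoring is a simple word-counting approach (naive Bayes) that I picked because it fits in a few lines.

```python
import math
from collections import Counter

def train(examples):
    """Count how often each word shows up in spam and in not-spam ("ham") emails."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    return word_counts, label_counts

def classify(text, word_counts, label_counts):
    """Return the label whose training examples look most like this text."""
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    total_examples = sum(label_counts.values())
    scores = {}
    for label in ("spam", "ham"):
        total_words = sum(word_counts[label].values())
        # Start from how common the label is overall.
        score = math.log(label_counts[label] / total_examples)
        for word in text.lower().split():
            # Add-one smoothing so a never-seen word doesn't zero out the score.
            count = word_counts[label][word] + 1
            score += math.log(count / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)

# Hypothetical labeled examples; a real filter would learn from millions.
examples = [
    ("win a free prize now", "spam"),
    ("cheap pills free shipping", "spam"),
    ("claim your free money", "spam"),
    ("lunch meeting moved to noon", "ham"),
    ("notes from the team meeting", "ham"),
    ("are you free for lunch tomorrow", "ham"),
]
word_counts, label_counts = train(examples)
print(classify("free prize money", word_counts, label_counts))
print(classify("team lunch at noon", word_counts, label_counts))
```

Nobody wrote a rule saying "prize" is suspicious. The program only counted words in labeled examples, and that's the whole trick.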

Because it has a lot of useful applications like those, ML in general has been pretty hot for a few years now. But the real leap forward in the last 12 months has been in the area of Large Language Models, or LLMs.

LLMs are really big Markov chains

If you know what a Markov chain is, it's easy to think of an LLM as a really big one of those, but don't worry, I'm not going to assume you do.

Imagine you made a computer read every book in the world, and then got it to construct a list of every three-word phrase in those books. Then for each phrase, you got it to make a list of all the words it saw come after that phrase and rank them by how often that happened. Then you gave it the phrase

The cat sat on the _______

and then you asked it to predict what word would come next, based on the list it had made. It would almost certainly predict "mat", because in all the English text in the world that's how that phrase is most often completed. Similarly, if you gave it

The capital of the United States is

it would probably give you "Washington, D.C." because again, that's just a really common phrase. But already something interesting is happening, because it looks like the computer "knows" something: the capital. But it doesn't, it's just doing word completion.
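The list-making described above can be sketched in a few lines of Python. This is a toy, and everything in it is an assumption for illustration: the four-sentence `corpus` stands in for "every book in the world", and `build_table` and `predict_next` are names I made up. It only ever looks at the last three words of whatever phrase you give it.

```python
from collections import Counter, defaultdict

def build_table(text, context_size=3):
    """Map every run of `context_size` words to a count of the words that followed it."""
    words = text.lower().split()
    table = defaultdict(Counter)
    for i in range(len(words) - context_size):
        context = tuple(words[i:i + context_size])
        table[context][words[i + context_size]] += 1
    return table

def predict_next(table, phrase, context_size=3):
    """Return the most frequent follower of the last few words, or None if never seen."""
    context = tuple(phrase.lower().split()[-context_size:])
    followers = table.get(context)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# A tiny stand-in for "every book in the world".
corpus = (
    "the cat sat on the mat . "
    "the dog sat on the mat . "
    "the bird sat on the fence . "
    "the capital of the united states is washington ."
)
table = build_table(corpus)
print(predict_next(table, "The cat sat on the"))                   # mat
print(predict_next(table, "The capital of the United States is"))  # washington
```

In this corpus "sat on the" is followed by "mat" twice and "fence" once, so "mat" wins. The program has no idea what a cat or a capital is; it's just looking things up in a table of counts.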

Now scale up

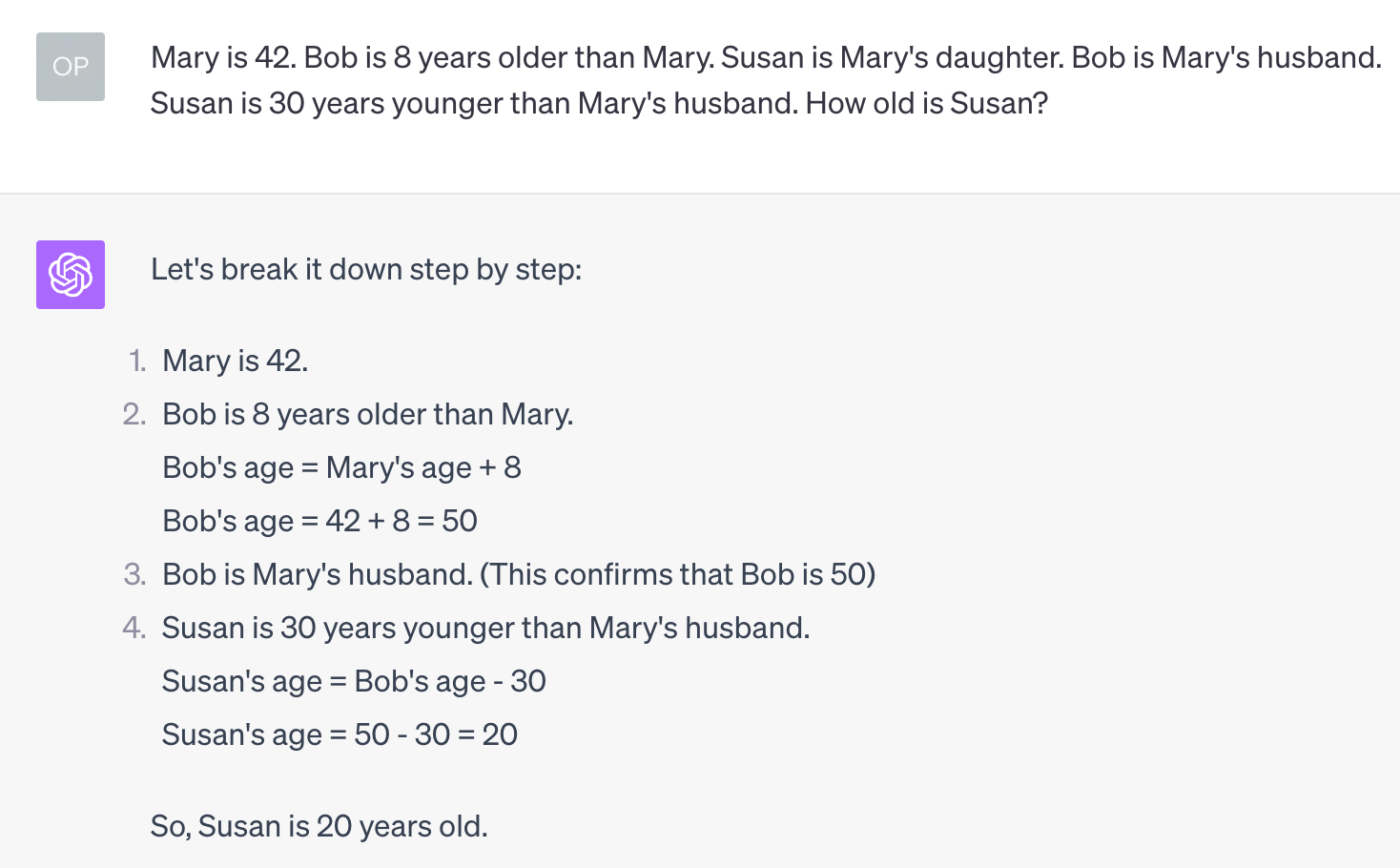

Now take this sentence-completion and scale it up, way up, by factors of millions and you get ChatGPT, the LLM that everyone's been talking about. You can give ChatGPT a question, and it will give you what appears to be an answer, like so:

It not only "knows" that the word should be "mat", but it gives you context about why. But this isn't doing anything fundamentally different. It gives an answer because statistically, answers are what usually follow questions. It gives the context because that context is what often surrounds that phrase. It's still just doing math, and not even very complicated math, just at enormously high scale. It doesn't actually "know" anything.

A quantitative change becomes qualitative

And yet something magical has happened. At some point what it does changed from looking like sentence completion to looking like understanding. What qualifies as understanding is a philosophical question, and a hotly debated one at that. Some people think that computers are beginning to show real understanding here, but most hold that this is still just math.

But whether it's real or just a convincing simulation doesn't really matter: at some point, looking like understanding is nearly as useful as truly understanding.

Giving computers context

Computers until now have been very good at processing data: they can stack up huge piles of data, sort it, filter it, move it around, spit it back out, all at enormous scale and impressive speed. But what they haven't been able to do is turn data into information. They haven't been able to understand what they're working with. But now, if you give a computer context, it can appear to reason about that information:

It figures out a conclusion from a related set of facts, namely how old Susan is. What's really interesting here is that this set of facts is not part of the set it was trained on. When it read all the books in the world, Susan's age wasn't in there. In fact, I never told it Susan's age. At some point along the line, prediction of the next most likely word became reasoning. This is the surprising and frankly spooky outcome that has made LLMs so interesting to me and many other people.

Computers that understand changes everything

There are a bunch of really neat things you can do with this new-found ability of computers to read information and understand it. Give it your technical documentation, and then ask it questions about how to do things. Give it a pile of legal contracts and get it to tell you what they do and don't allow you to do. Give it a pile of research papers and get it to summarize them and find connections between them. Give it access to your company Slack and get it to summarize everything that happened in the last two weeks while you were out on vacation.

LLMs are going to end up in everything

This transition of software from being a tool that we use to a sort of low-level assistant that we can partner with is going to change every piece of software out there. Software that doesn't have the ability to understand what it's working with is going to begin to feel strangely limited, even broken. It's a very exciting time to be working in software, and that's why it's what's next for me.